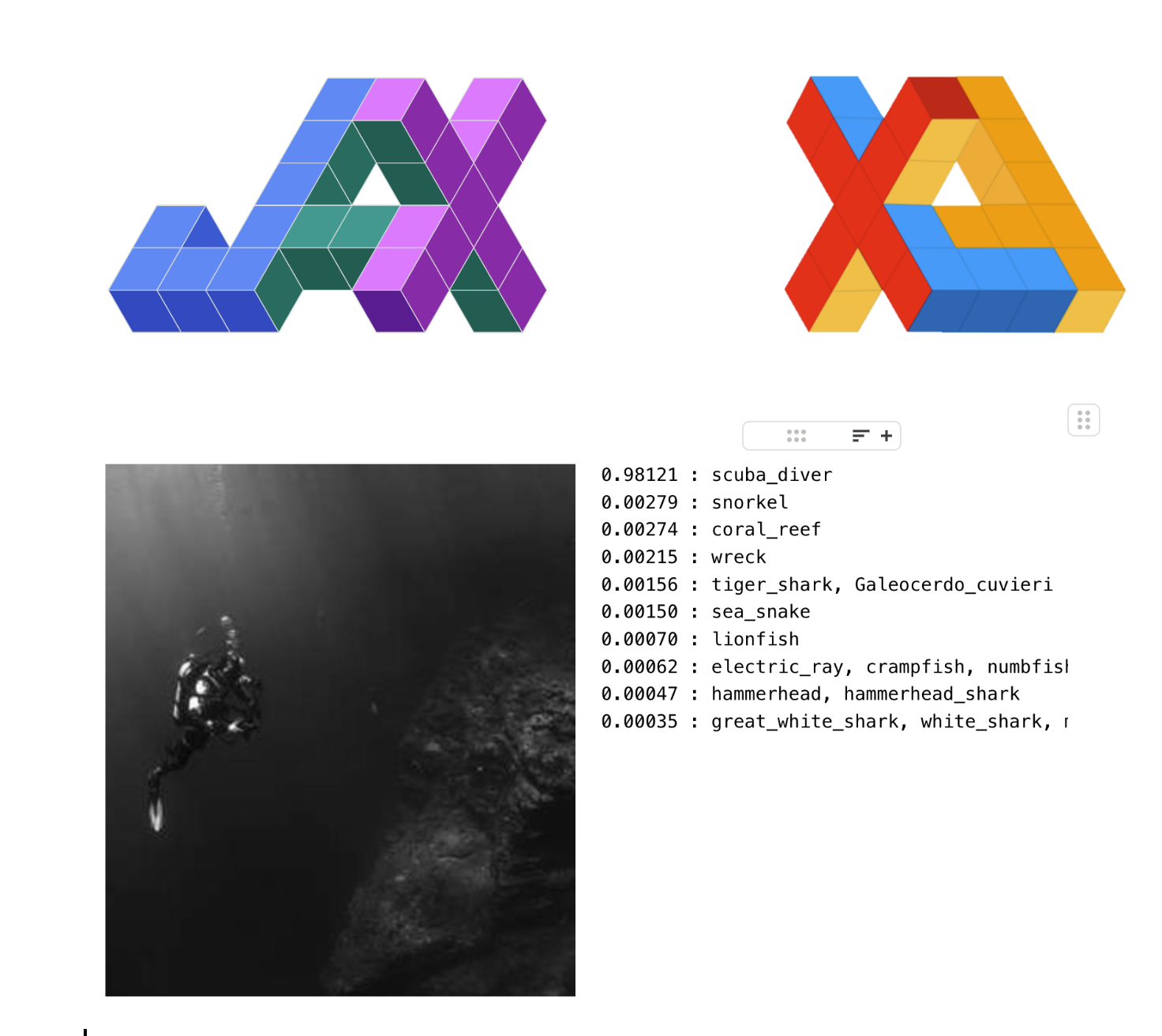

Turbocharge ML with JAX and TPUs

Welcome

JAX and TPUs are helping developers and researchers speed up machine learning training workloads and have more fun in the process.

You’ll learn JAX’s elegant, functional style and its transformations, which supercharge code that will look familiar to anyone who has worked with Python and NumPy. Starting from taking derivatives and just-in-time compilation, we will build up to linear regression and basic image recognition models.

In later stages of the course, we will run cutting-edge models, including Stable Diffusion from Hugging Face and Google Research’s Vision Transformer.

We hope you enjoy the course and start experimenting!